A new preprint, “Partisans are more likely to entrench their beliefs in misinformation when political outgroup members fact-check claims,” by Diego Reinero, Elizabeth Harris, Steve Rathje, and Jay Van Bavel (and me), sheds some light on whether fact-checking political misinformation changes beliefs.

The spread of misinformation is a global issue, and how to address it is a topic of lively debate. Four in five Americans get news from social media, which makes misinformation a particular threat, with real-world implications for distorting people’s decision making about high-stakes topics. Worse yet, politicians are often the sources of misinformation, spreading it among their followers.

Thinking in Bets is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

Saying, “Well, that’s just politics for you,” isn’t necessarily inaccurate, but it’s unenlightening from a decision science perspective. Frankly, it’s also hopelessly unhelpful (not to mention dangerous) as a practical matter. Many of the most pressing issues in our lives are now “political,” whether it’s the response to a global pandemic or a budget impasse that could lead the U.S. to default on its debt.

Aren’t we pretty much doomed if our response to the spread of misinformation is to throw our hands in the air and resignedly say the equivalent of “It’s Chinatown, Jake”?

Whether it’s through Community Notes on Twitter, Snopes.com, or PolitiFact, fact-checking is the go-to strategy for correcting misinformation.

Anyone who has spent any time on Twitter is probably skeptical that fact-checks work to correct political beliefs. It just feels like no one ever changes their mind anymore. Even so, there is a growing body of evidence that fact-checks do reduce belief in misinformation, even if they are less effective for political information (which is highly emotionally and morally activating).

But there is also a conflicting body of evidence suggesting that corrections can backfire, causing individuals to believe misinformation even more after seeing a correction of it. That feels a lot more like what we all experience on social media. No matter how many times misinformation is corrected, it feels like everyone just becomes more entrenched in their beliefs.

So which is it? Do people heed corrections of misinformation, or do they rely on partisan identity to guide their beliefs, so that corrections sometimes backfire?

To answer that question, we studied whether seeing corrections of political misinformation on Twitter was effective in reducing belief in misinformation, depending on the partisan identity of the source.

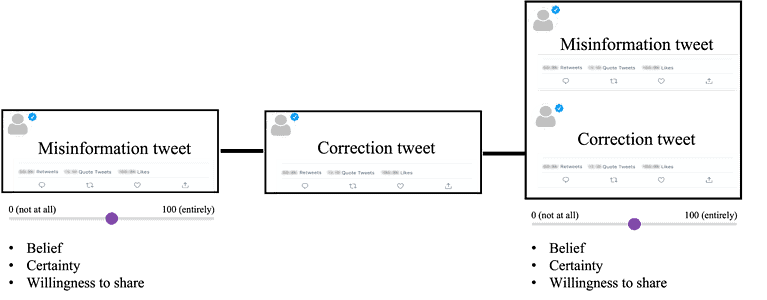

Participants were first assessed for their partisan identity (Republican or Democrat). They were then shown a tweet containing misinformation and assessed on the strength of their belief in the misinformation, as well as their willingness to share the tweet. Next, they were shown a correction that came from a political in-group member, an out-group member, or a neutral source, and were assessed again on the strength of their belief and their willingness to share.

Across three experiments, we used different kinds of sources: famous politicians (like Ted Cruz or AOC), less famous politicians, trusted nonpartisan organizations, and lay partisan and neutral Twitter users. The misinformation itself was always politically partisan.

We found that corrections did, on average, reduce belief in misinformation. The problem was that the effect of the corrections was swamped by partisan identity. Put simply, even if participants believed the misinformation less after being corrected, that effect came nowhere close to overcoming the strength of their initial belief in misinformation from someone of the same political party.

The effect of the misinformation being tweeted by someone of the same political affiliation was five times more powerful than the effect of corrections on belief. People just believe stuff that co-partisans tweet out, and even if they update their belief when corrected, they still end up very far apart from people on the other side of the aisle.

The political divide when it comes to beliefs is real and vast.

Worse yet, even though people do, on average, update their beliefs when they are corrected (good news!), fact-checks do sometimes backfire (bad news!). This is especially true when the correction comes from someone of the other political party. Fact-checks from political out-group members were 52% more likely to backfire.

This helps explain why some research shows that people are rational belief updaters who change their minds when corrected, while other research shows a backfire effect. It turns out that, on average, people change their beliefs, but that does not mean everyone changes their mind all the time. Sometimes corrections backfire, and when they do, it is predictable from partisan identity.

Not only that, even when people do update their beliefs, the gulf between the parties remains huge after the correction.

The good news is that fact-checks from political in-group members are the most effective way to lead partisans to hold more accurate beliefs.

The problem, of course, is getting in-group members to fact-check misinformation from their own group.
